The Power, Potential and Prejudice of AI: for HR practitioners and beyond…

The implementation of responsible and ethical AI is a critical discussion for the future of workplace productivity, human wellbeing and social cohesion, and one which benefits greatly from the concepts of “Bothness” and Radical Optimisation.(1, 2)

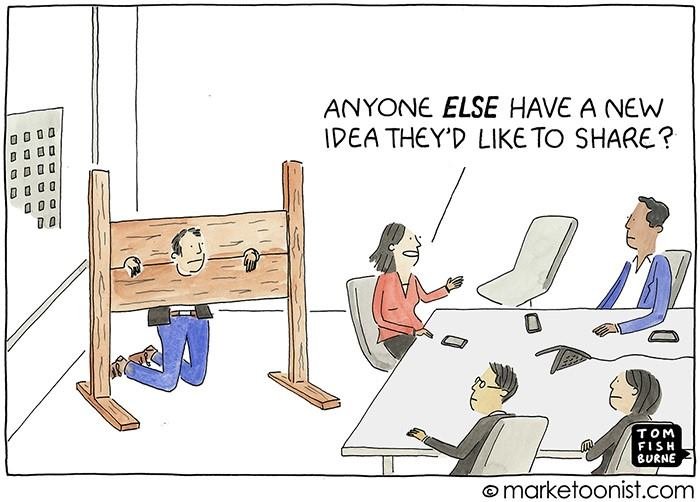

Maximising impact and outcomes will require the ability to optimise competing priorities, rather than maximising either of the polar extremes. That, in turn, will require outcomes to be optimised at an organisational level, which cannot be achieved without a mature degree of connection and collaboration, coordination and calibration between organisational functions and hierarchies. However, this is relatively uncommon in today’s world of siloed priorities and performance payments.

Last week, the Australian HR Institute’s NSW Diversity and Inclusion Committee hosted three global speakers to unpack the impact of AI for HR, not just in recruitment and across the employee lifecycle, but across the whole organisation.

How well prepared is HR to manage the risks and maximise the rewards of AI?

Nearly 90% of respondents to our pre-event survey said either that their existing HR systems didn’t have AI embedded or that they weren’t sure whether they did.

70% indicated they were either not at all prepared or not particularly well prepared for AI in their organisation.

The Committee’s fears were confirmed: the HR profession had not widely understood that AI has been embedded within existing HR systems for some years.(3)

Whilst there’s no doubt AI has extraordinary power and potential to transform organisational performance, through its remarkable ability to analyse complex data and generate insights from disparate sources, there’s also a darker side of AI we need to be aware of.

That may sound dramatic, but we know that today’s AI has identity biases embedded in it, unintentionally built in during its development and reinforced during use.(4, 5)

This is why responsible organisations such as Amazon scrapped their AI recruitment engine when they discovered it discriminated against women. However, these biases don’t just exist in recruitment. They exist throughout the whole employee lifecycle and in other functional applications, such as finance. This was ably demonstrated when Apple co-founder Steve Wozniak reported that the Apple Card’s algorithm had given him a higher credit limit than his wife, despite the couple sharing bank accounts.

The Australian Council of Trade Unions (ACTU) clearly articulated the risk/reward dichotomy of this extraordinary technology, albeit somewhat bluntly, in a recent press release:

“[W]orkers are… being hired and fired by algorithm, … and discriminated against by bosses’ bots.”

“We need to ensure these risks are eliminated, whilst encouraging the development of technology that uplifts working people and any productivity benefits… are shared…”

Despite AI’s recognised long-term impact on jobs, even the ACTU highlights the need to combine and optimise both ‘poles of possibility’, i.e. to maximise reward and mitigate risk.(4) In other words, it’s vital we maximise “Bothness” to realise the greatest potential for organisations and for society.

Dr Susan David “Bothness”

Bain & Company’s 2023 research confirms the positive financial impact (in the order of 2x profit) for organisations implementing “Bothness”, i.e. responsible and ethical AI. Ethisphere’s benchmarking further confirms the positive medium-term financial impact for organisations with ethical cultures and systems.

These lessons echo the recommendations of the Hayne Royal Commission, in particular the need for robust, psychologically safe ‘speak up’ cultures. Whilst many Australian banks invested in systemic and cultural change in its aftermath, some of those systemic remediations have recently been wound back, placing the focus once again on the importance of culture.(6) In today’s similarly complex context of competing priorities and substantial social impact, it would be unwise for any organisation to forget those vital cultural lessons.

As we know, however, Psychological Safety will only ever be as strong as the most senior leader allows, which raises the question:

How committed are Leaders to optimising AI for competitive advantage?

A recent survey from MIT found that only 6% of leaders have developed processes to leverage AI responsibly and ethically. The same survey found that only 44% of frontline team members believe their leadership teams are equipped to manage AI’s ethical issues and risks, which means 56%, more than half, do not yet share that confidence.(7)

Alongside leaders’ understandable optimism about AI’s impressive benefits, and their confidence in realising tangible value through its data insights and productivity gains, it would be wise not to ignore the view of the frontline, who are usually more familiar with the risks and realities on the ground.

If organisations and Executive Teams are to create optimised outcomes, rather than maximising one priority at the expense of another, they will need to afford authentic respect to the value each piece of the jigsaw puzzle contributes to the collective outcomes of the whole organisation.

This will require discipline in managing both speed, to maximise the rewards of AI’s extraordinary analytical capabilities, and safety, to accommodate frontline teams’ (and likely customers’) views of risk readiness. We call this delicate process of organisational optimisation Radical Optimisation.(2, 4)

Radical Optimisation in the Implementation of Responsible and Ethical AI

Radical Optimisation: Travers-Wolf

How can HR optimise the ‘poles of possibility’ for competitive advantage in HR and across the organisation?

A central recommendation in the research and in various best-practice governance frameworks is the importance of partnering Artificial Intelligence with Human Intelligence.(4, 8)

We’ll explore this topic and others further in future articles. In the meantime, let’s start by tuning our radars to ‘Bothness Basics’ and positive examples of Radical Optimisation in our organisations.

Where and How do we begin to enable Responsible & Ethical AI?

1. Audit all existing Business Systems (not just HR): check for the presence of AI, and understand how it generates its recommendations.

· Transparency and explainability are vital elements of trust: they let you understand how AI is making decisions in your organisation.(4)

2. Review the Outputs of your Business Systems: across the employee, customer, finance and other lifecycles.

Check for bias impacting groups with less economic advantage, who are typically under-represented in our workplaces and in the development of AI.

3. Compare the Outputs: from your AI-generated and Human Intelligence (HI) systems.

What are the differences? Who is impacted? What are the consequences?

Could AI be exacerbating the talent shortage in your organisation by removing or disadvantaging under-represented talent?

Where might you need to prioritise the AI / HI partnership to ensure fairness for all?

Might there be an opportunity to create higher performing organisations through more robust DEI strategies, and recalibrated AI?

How can we enable productivity and profitability, and support trust and social cohesion, at a time when they are greatly needed?(9)
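Steps 2 and 3 above can be made concrete with a simple disparity check. The sketch below is a minimal illustration only, assuming you can export each decision with a group label plus both the AI outcome and the human (HI) outcome; the record layout, the group names and the data are hypothetical. For each decision source it computes every group’s selection rate, divides it by the highest group’s rate (an “impact ratio”), flags ratios below 0.8, and then measures how often AI and HI disagree within each group. The 0.8 cut-off borrows the US “four-fifths” rule of thumb purely as an assumed example threshold, not an Australian standard.

```python
from collections import defaultdict

# Hypothetical records: (group, ai_selected, human_selected).
# Group labels and outcomes are made-up illustration data, not survey results.
DECISIONS = [
    ("group_a", True, True),
    ("group_a", True, False),
    ("group_a", True, True),
    ("group_a", False, False),
    ("group_b", False, True),
    ("group_b", False, False),
    ("group_b", True, True),
    ("group_b", False, True),
]

FOUR_FIFTHS = 0.8  # assumed threshold, borrowed from the US "four-fifths" rule of thumb


def selection_rates(records, source_index):
    """Share of each group selected by one decision source (1 = AI, 2 = human)."""
    totals, selected = defaultdict(int), defaultdict(int)
    for record in records:
        group = record[0]
        totals[group] += 1
        if record[source_index]:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    return {g: (r / best if best else 0.0) for g, r in rates.items()}


def disagreement_rates(records):
    """Share of each group's cases where the AI and human decisions differ."""
    totals, differ = defaultdict(int), defaultdict(int)
    for group, ai, human in records:
        totals[group] += 1
        if ai != human:
            differ[group] += 1
    return {g: differ[g] / totals[g] for g in totals}


def audit(records):
    """Impact ratio and below-threshold flag per group for AI and HI, plus disagreement."""
    report = {}
    for name, index in (("AI", 1), ("HI", 2)):
        ratios = impact_ratios(selection_rates(records, index))
        report[name] = {
            g: (round(ratio, 2), ratio < FOUR_FIFTHS) for g, ratio in ratios.items()
        }
    report["disagreement"] = disagreement_rates(records)
    return report


if __name__ == "__main__":
    for key, value in audit(DECISIONS).items():
        print(key, value)
```

On this toy data the AI outcomes flag group_b (impact ratio 0.33), the human outcomes flag group_a (0.67), and AI and HI disagree on half of group_b’s cases. Neither source is bias-free, which is exactly the kind of difference step 3 asks you to investigate before deciding where the AI / HI partnership needs recalibrating.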

Recapping the Key Learnings

Connection and collaboration, coordination and calibration between organisational silos and hierarchies will be among the most important elements for the successful implementation of responsible and ethical AI.

This will only be as strong, however, as the respect afforded to each of the competing priorities, i.e. AI/HI, Speed/Safety and Risk/Reward.

If organisations seek to maximise rather than optimise outcomes, they will surely find themselves out of step with what today’s society expects from organisations in achieving both financial and human impact.

Your Mission, should you choose to accept it…

In the coming weeks, watch out for the “Bothness Basics” in your organisation. How well calibrated are AI and HI, Speed and Safety, Risk and Reward?

What themes do you notice?

We’re grateful to our global experts, Dr Muneera Bano (CSIRO); Siobhan Savage, CEO and Co-Founder of Reejig, an audited responsible AI platform; and Dr Juliet Bourke (UNSW), who generously shared their insights with us!

At I LEAD Consulting we’re on a mission to simplify Diversity and Inclusion for Leaders and Teams.

PRACTICE INCLUSION | EMBRACE DIVERSITY | ACTIVATE ALLIES

References: 1. Susan David; 2. I LEAD Consulting, Travers-Wolf; 3. Bersin; 4. McKinsey; 5. CSIRO; 6. The Australian Financial Review; 7. Udemy; 8. CSIRO, WEF, IBM, UN; 9. Edelman, Scanlon Foundation